1. Install the CUDA driver

https://blog.csdn.net/qq_20492405/article/details/79034430

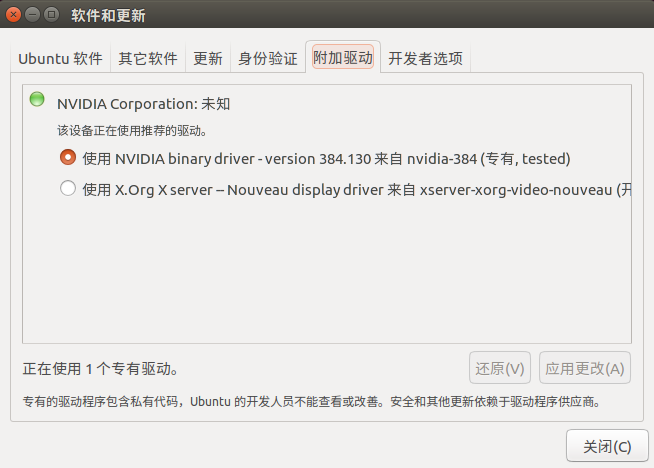

In Settings, open Software & Updates and choose the driver under Additional Drivers.
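Once the driver has been selected (and, typically, after a reboot), a quick terminal check can confirm that it loaded. The sketch below assumes `nvidia-smi` was installed together with the driver package; the function name `check_nvidia_driver` is made up for illustration.

```shell
# Hypothetical helper: report the GPU name and driver version, or fail
# with a message when nvidia-smi is not on the PATH.
check_nvidia_driver() {
    if ! command -v nvidia-smi >/dev/null 2>&1; then
        echo "nvidia-smi not found: the driver is probably not installed" >&2
        return 1
    fi
    # Print one CSV line per GPU, e.g. "<gpu name>, <driver version>"
    nvidia-smi --query-gpu=name,driver_version --format=csv,noheader
}
```

Calling `check_nvidia_driver` with no arguments prints one line per GPU when the driver is working.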

2. Install cuda-toolkit, cuDNN, etc.

2.1 cuda-toolkit

Download from https://developer.nvidia.com/cuda-80-ga2-download-archive and install using the deb method. When it finishes, add the following to ~/.bashrc:

    export CUDA_HOME=/usr/local/cuda

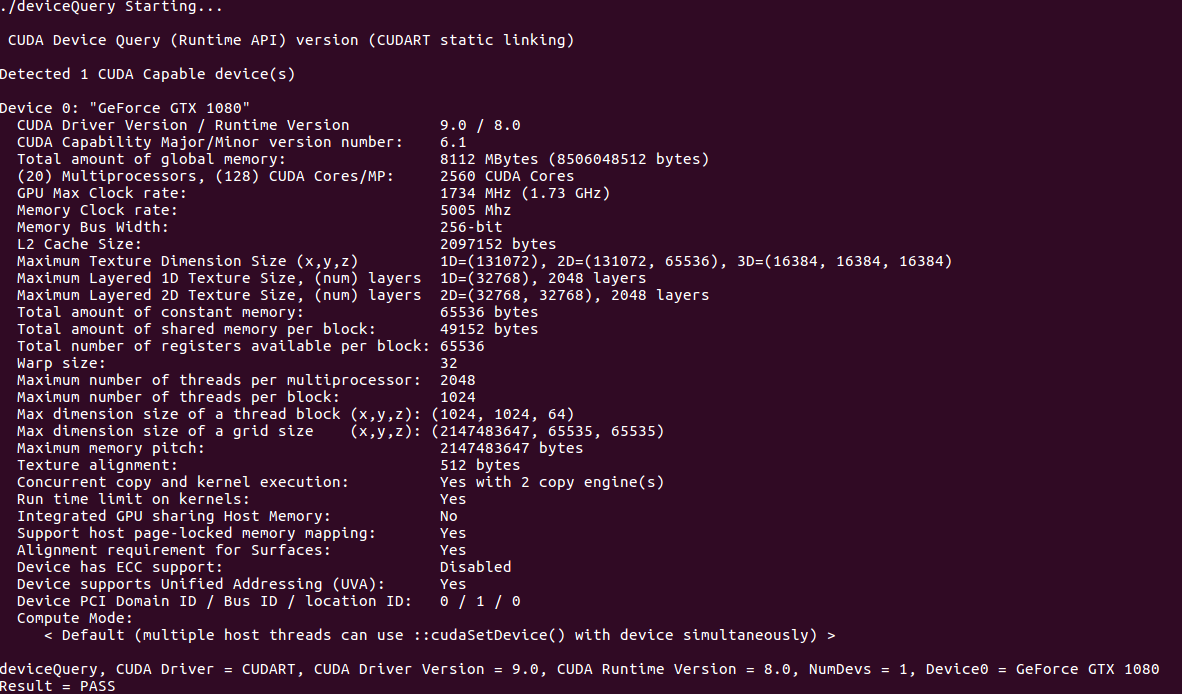
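Only the `CUDA_HOME` line survives in the text above. A common companion setup also puts the toolkit's `bin` and `lib64` directories on `PATH` and `LD_LIBRARY_PATH`. The sketch below is an assumption about what that might look like, not the original author's exact file; `append_once` and `setup_cuda_env` are hypothetical helper names.

```shell
# Hypothetical helper: append a line to a file only if it is not already there,
# so running the setup twice does not duplicate entries.
append_once() {
    line="$1"
    file="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Assumption: the toolkit is reachable at /usr/local/cuda.
setup_cuda_env() {
    rc="${1:-$HOME/.bashrc}"
    append_once 'export CUDA_HOME=/usr/local/cuda' "$rc"
    append_once 'export PATH="$CUDA_HOME/bin:$PATH"' "$rc"
    append_once 'export LD_LIBRARY_PATH="$CUDA_HOME/lib64${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"' "$rc"
}
```

Run `setup_cuda_env` (it defaults to `~/.bashrc`), then `source ~/.bashrc` or open a new terminal so the variables take effect.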

Build the CUDA samples

    cd /usr/local/cuda/samples

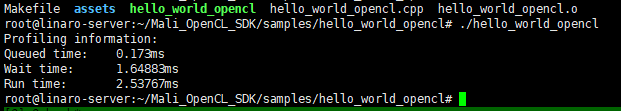
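The build commands that followed the `cd` are not preserved here. As a rough sketch, building and running just the `deviceQuery` sample is a common smoke test. The directory layout (`1_Utilities/deviceQuery`, output under `bin/x86_64/linux/release`) is assumed from the CUDA 8.0 samples tree on x86_64, and `run_device_query` is a hypothetical name.

```shell
# Hypothetical helper: build and run deviceQuery from a CUDA samples tree.
# Assumption: the tree follows the CUDA 8.0 layout on an x86_64 machine.
run_device_query() {
    samples_dir="${1:-/usr/local/cuda/samples}"
    if [ ! -d "$samples_dir/1_Utilities/deviceQuery" ]; then
        echo "deviceQuery sample not found under $samples_dir" >&2
        return 1
    fi
    # The default samples directory is root-owned, so fall back to sudo
    # when the current user cannot write to it.
    if [ -w "$samples_dir" ]; then
        make -C "$samples_dir/1_Utilities/deviceQuery" || return 1
    else
        sudo make -C "$samples_dir/1_Utilities/deviceQuery" || return 1
    fi
    "$samples_dir/bin/x86_64/linux/release/deviceQuery"
}
```

If the driver and toolkit are set up correctly, `deviceQuery` lists the detected GPUs and finishes with a pass/fail result line.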

The result is as follows:

2.2 cudnn

https://developer.nvidia.com/cuda-80-ga2-download-archive

Install the cuDNN 7.1.4 deb package for CUDA 8.0; by default it installs to /usr/local.

Check the result:

    # Check the cuDNN version
    cat /usr/include/cudnn.h | grep CUDNN_MAJOR -A 2

    # Check the cuda-toolkit version
    nvcc --version
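The header check above prints the raw `#define` lines. A small helper can join them into a single version string, which is easier to compare in scripts. This is a sketch that assumes the header defines `CUDNN_MAJOR`, `CUDNN_MINOR`, and `CUDNN_PATCHLEVEL` as plain integers, as the cuDNN 7.x headers do; `cudnn_version` is a hypothetical name.

```shell
# Hypothetical helper: print the cuDNN version as major.minor.patch,
# read from the #define lines of the given header.
cudnn_version() {
    header="${1:-/usr/include/cudnn.h}"
    awk '
        $1 == "#define" && $2 == "CUDNN_MAJOR"      { major = $3 }
        $1 == "#define" && $2 == "CUDNN_MINOR"      { minor = $3 }
        $1 == "#define" && $2 == "CUDNN_PATCHLEVEL" { patch = $3 }
        END {
            # Fail when the header did not contain a major version at all
            if (major == "") exit 1
            print major "." minor "." patch
        }
    ' "$header"
}
```

Calling `cudnn_version` with no argument reads `/usr/include/cudnn.h`; pass another path if the header lives elsewhere.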

3. Install essential software

    # Terminal emulator: unlike gnome-terminal, it supports horizontal and vertical splits and is convenient to use; the Linux counterpart of "iTerm"

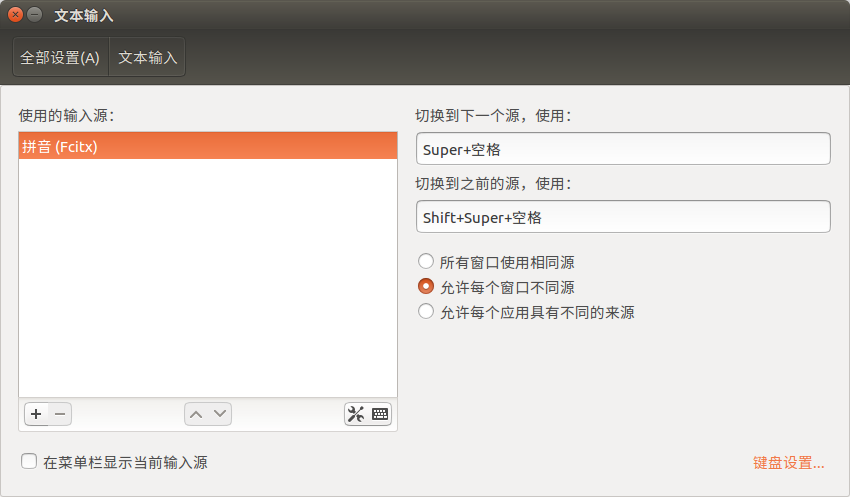

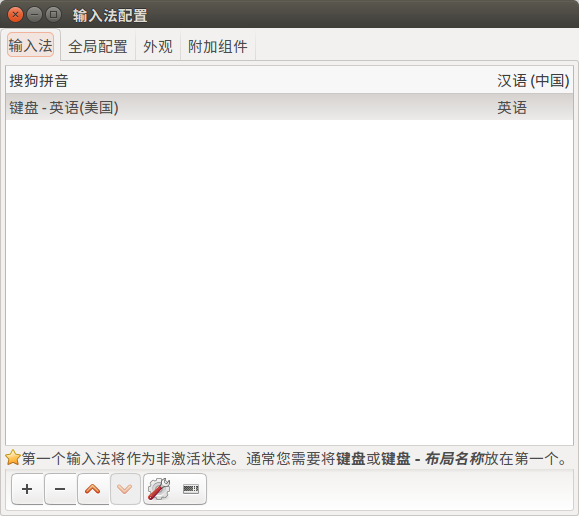

After installing the Sogou input method, configure it as follows:

- Settings => Text Entry: keep only fcitx

- In the global search box, look up the fcitx configuration tool and keep only Sogou and English

4. Configure VNC remote access

Follow the first step of https://www.cnblogs.com/xuliangxing/p/7642650.html

5. Shadowsocks-Qt5

    sudo add-apt-repository ppa:hzwhuang/ss-qt5

When that is done, open Chrome, install the Proxy SwitchyOmega extension, and configure it.